Ashley Cook

January 24, 2022

Today, Daniel Sharp, also known as madeofants, releases his EP Lost & Found. Alongside the music, Sharp presents a video for the song “cyberhellravedungeonfunruncore (Marshall Applewhite’s Kids Today Get Distracted Easily Mix)”, made in collaboration with Joel Dunn. The music, which is both animated by the video and the source of its animation, is officially released by Junted (https://junted.bandcamp.com/), a Detroit-based music label founded by Dunn in 2017 that releases what they call “gloriously primitive music”.1 This description feels accurate when browsing the Junted catalogue: much of the work focuses heavily on drumming and on tones that seem to preserve the character of music’s earliest stages. Much of this work ignores linear time and instead spans eras, connecting ancient creative methods with new technologies. Recalling the soundtracks of science fiction movies, the work of these artists participates in those films’ most poignant conversations, where eras coexist as a reminder of our mortality, malleability, vulnerability and wildness. This is demonstrated through both the audio and the accompanying visuals. Browsing most of the Junted releases, you will find heavy use of 3-D rendered forms that teeter between human, alien and machine. The newest EP and video by madeofants carry this further.

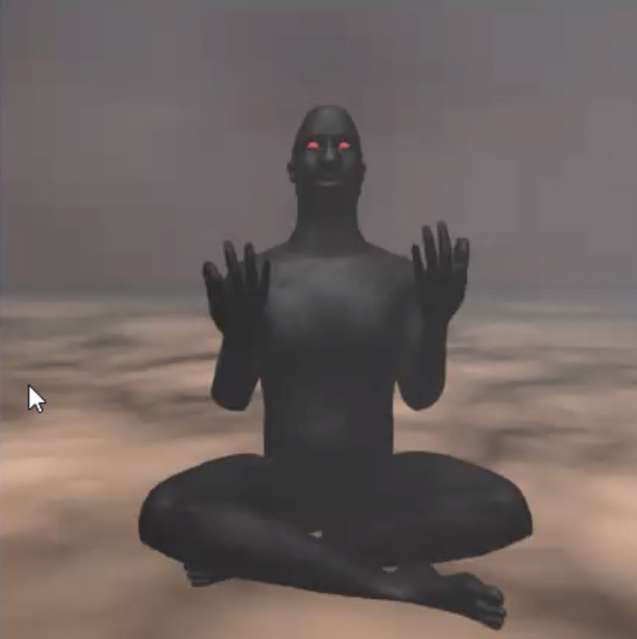

The interdependent relationship between these particular videos and the music was achieved with a video technique that “uses audio maps to create latent interpolation while generating images from an AI brain”.2 In layman’s terms, this technique allows the images in the videos to be manipulated, prompting them to change from one image to the next in response to the music. To add another layer of complexity, the imagery presented in these videos was generated by an AI brain using StyleGAN. “Generative Adversarial Networks are effective at generating high-quality and large-resolution synthetic images. The generator model takes as input a point from latent space and generates an image. This model is trained by a second model, called the discriminator, that learns to differentiate real images from the training dataset from fake images generated by the generator model. As such, the two models compete in an adversarial game and find a balance or equilibrium during the training process. Many improvements to the GAN architecture have been achieved through enhancements to the discriminator model. These changes are motivated by the idea that a better discriminator model will, in turn, lead to the generation of more realistic synthetic images.”3
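For readers curious what “audio maps” driving “latent interpolation” might look like in practice, the following is a minimal sketch and not Sharp and Dunn’s actual pipeline. It assumes the loudness of each video frame’s slice of audio decides how quickly the image moves between a handful of latent-space points (here called keyframes). The function names, the 512-dimensional latent size, the frame rate and the synthetic test tone are all illustrative assumptions; a real setup would pass each resulting vector to a trained StyleGAN generator, which is not shown.

```python
import numpy as np

def rms_envelope(samples, frame_len):
    """Per-frame loudness (root mean square) of a mono audio signal."""
    n_frames = len(samples) // frame_len
    frames = samples[: n_frames * frame_len].reshape(n_frames, frame_len)
    return np.sqrt((frames ** 2).mean(axis=1))

def audio_driven_latents(envelope, keyframes):
    """Walk through latent keyframes, advancing faster when the audio is louder.

    Needs at least two keyframes. Returns one latent vector per video frame.
    """
    # Cumulative loudness gives a position in [0, 1] that never moves backwards.
    progress = np.cumsum(envelope)
    if progress[-1] > 0:
        progress = progress / progress[-1]
    else:
        # Fully silent input: fall back to a steady walk.
        progress = np.linspace(0.0, 1.0, len(envelope))
    # Stretch the position across the segments between keyframes.
    pos = progress * (len(keyframes) - 1)
    idx = np.minimum(pos.astype(int), len(keyframes) - 2)
    t = (pos - idx)[:, None]
    # Linear interpolation between the two neighbouring keyframes.
    return (1 - t) * keyframes[idx] + t * keyframes[idx + 1]

# Illustrative input: one second of silence followed by one second of tone.
sr, fps = 22050, 30                      # assumed sample rate and frame rate
time = np.arange(sr * 2) / sr
audio = np.sin(2 * np.pi * 220 * time) * (time > 1.0)

envelope = rms_envelope(audio, sr // fps)
keys = np.random.default_rng(0).standard_normal((4, 512))  # hypothetical latent points
latents = audio_driven_latents(envelope, keys)
```

In this sketch the image would hold still on the first keyframe while the audio is silent and then travel through the remaining keyframes once the tone starts, which is the “change in response to the music” described above in its simplest form.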

To train the AI brain with StyleGAN, Sharp started with a set of 1,000 images of fake people that had been generated by AI prior to this production. He fed them into the AI brain and provided “real images” for it to reference, so that it could manipulate the pre-made images of the fake people with the goal of making them look “more real”. StyleGAN was developed to generate artificial imagery that looks real; this often comes in handy for identification purposes in law enforcement, but it is also used in advertising to preserve the identities of actual living people.4

As a creative medium, however, the technology was put to a different use: the “real” images uploaded by Sharp for these videos were 3-D renders of alien-like figures and wiry forms created by Dunn. With each new attempt at further “training the brain”, the fake AI people are mixed with these 3-D renders, resulting in bizarre figures that serve primarily as outlets for awe. Not only does this approach lead to extremely interesting visuals, but it also required Sharp and Dunn to learn how to use this contemporary AI technology, understand it and bring it to viewers’ attention. Because the technology is used here in a slightly more obscure way than is typical in the “real world”, the work raises the conversation not only of the ever-growing influence that AI has on our daily perception of reality, but also of how easily it can be influenced.

To learn more about madeofants, visit his SoundCloud: https://soundcloud.com/madeofants

1 Junted, last modified 2022, https://junted.bandcamp.com/.

2 Daniel Sharp, interview by Ashley Cook, Detroit, MI, January 9, 2022.

3 Jason Brownlee, “A Gentle Introduction to StyleGAN, the Style Generative Adversarial Network,” August 19, 2019, Machine Learning Mastery, https://machinelearningmastery.com/.

4 Zhigang Li, Yupin Luo, “Generate Identity-Preserving Faces by Generative Adversarial Networks,” Department of Automation at Tsinghua University, June 25, 2017, https://arxiv.org/pdf/1706.03227.pdf.